In this article, let’s integrate OpenTelemetry and Jaeger into a system of multiple microservices.

Sprkl’s backend stack is composed of Go and NestJS (a TypeScript framework for Node) services. The Go services usually handle heavy data and computational tasks, while the NestJS services form the control plane. Our service-to-service communication runs over gRPC and relies heavily on the protocol’s streaming capabilities. At Sprkl, we invest effort in making our services observable by adding targeted tracing to new features and various metrics to the APIs. Our tracing stack is OpenTelemetry and Jaeger. I thought you might find the OpenTelemetry and Jaeger setup project insightful, so I decided to share it.

Find full source code in the GitHub repository

We recently decided to build new control services with NestJS, and we wanted the same observability that we had in our Go services. One of these NestJS services had a streaming API that handled events targeted at it. Unfortunately, the streaming API stopped working once I followed the required steps to instrument the service. The cause turned out to be a bug in the gRPC instrumentation library, which I resolved: Here.

I wanted to write this blog post to share a raw and basic configuration for NestJS with OpenTelemetry and Jaeger, which can take some time to set up. So now, let’s see how to initialize a basic NestJS gRPC service and add OpenTelemetry instrumentation to get some observability.

Now, let’s integrate OpenTelemetry and Jaeger into a backend system

Requirements

To get started with NestJS, let’s install its CLI first.

npm i -g @nestjs/cli

Now we can initialize an empty project.

nest new nestjs-jaeger-example && cd nestjs-jaeger-example

gRPC works by default with protobuf, which lets you define language-agnostic schemas for your APIs. Protobuf defines not only the data schema but also the service schema, which is very powerful. This is a great way to decouple the API definition from the client and server implementations, and it shines in multi-language backend environments.

Let’s create our protobuf under proto/example/v1/example.proto, with the following directory structure:

|___ src

|___ test

|___ proto

|___ package.json

// proto/example/v1/example.proto

syntax = "proto3";

package example.v1;

service ExampleService {

rpc GetExample(GetExampleRequest) returns (GetExampleResponse) {}

}

message GetExampleRequest {

int32 integer_data = 1;

}

message GetExampleResponse {

repeated string string_data = 1;

bool bool_data = 2;

}

To learn more about the protobuf protocol, check out the protobuf docs and the gRPC docs.
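One detail worth keeping in mind when reading the rest of the code: the schema uses snake_case field names (`integer_data`), while the TypeScript side works with camelCase (`integerData`). `@grpc/proto-loader` converts field names to camelCase unless its `keepCase` option is set. The sketch below only illustrates that naming rule in plain TypeScript; `snakeToCamel` and `toCamelKeys` are hypothetical helpers written for this example, not part of any gRPC package, and the conversion is a simplified assumption.

```typescript
// Hypothetical helper: converts a snake_case proto field name to camelCase.
function snakeToCamel(name: string): string {
  return name.replace(/_([a-z0-9])/g, (_match: string, ch: string) => ch.toUpperCase());
}

// Hypothetical helper: renames the top-level keys of a decoded message.
function toCamelKeys(message: Record<string, unknown>): Record<string, unknown> {
  const result: Record<string, unknown> = {};
  Object.keys(message).forEach((key) => {
    result[snakeToCamel(key)] = message[key];
  });
  return result;
}

// Field names as written in example.proto ...
const protoShaped = { string_data: ['hello'], bool_data: true };
// ... and as the TypeScript code in this article expects them.
const camelShaped = toCamelKeys(protoShaped);
console.log(camelShaped);
```

This is why the TypeScript interfaces later in the article declare `integerData`, `stringData` and `boolData` rather than the snake_case names from the schema.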

Schema linting is important in an organizational setting. We will use buf for linting our protobufs.

Buf is a great tool for managing, building, and linting protobufs. The main concept is that you organize your protobufs in modules, and you can add rules to these modules. Buf also has a declarative code generation mechanism, which is a great way to cut down on protoc scripts (although it suits other languages better).

So after setting up our protobuf schema, let’s initialize our NestJS microservice:

Back to our NestJS service. To set up a gRPC server with NestJS, I’ll use the NestJS microservices package and the gRPC (grpc-js) packages.

npm i --save @grpc/grpc-js @grpc/proto-loader @nestjs/microservices

Let’s clean the initialized project and start with a basic setup.

// src/main.ts

import { NestFactory } from '@nestjs/core';

import { MicroserviceOptions } from '@nestjs/microservices';

import { AppModule } from './app.module';

import { grpcOptions } from './utils/grpc.options';

async function bootstrap() {

const app = await NestFactory.createMicroservice<MicroserviceOptions>(

AppModule,

grpcOptions,

);

await app.listen();

}

bootstrap();

// src/utils/grpc.options.ts

import { GrpcOptions, Transport } from '@nestjs/microservices';

import * as path from 'path';

import {

EXAMPLE_PACKAGE,

EXAMPLE_REL_PROTO_PATH,

} from '../example/example.proto'; // this file is described below

export const PROTO_MODULE_PATH = path.join(__dirname, '..', '..', 'proto');

export const grpcOptions: GrpcOptions = {

transport: Transport.GRPC,

options: {

package: [EXAMPLE_PACKAGE],

protoPath: path.join(PROTO_MODULE_PATH, EXAMPLE_REL_PROTO_PATH),

url: 'localhost:3000',

},

};

// src/example/example.proto.ts

import * as path from 'path';

/* These are the typescript interfaces that describe the protobuf contents */

export interface GetExampleRequest {

integerData: number;

}

export interface GetExampleResponse {

stringData: string[];

boolData: boolean;

}

export const EXAMPLE_PACKAGE = 'example.v1';

export const EXAMPLE_REL_PROTO_PATH = path.join(

'example',

'v1',

'example.proto',

);

// src/app.module.ts

import { Module } from '@nestjs/common';

import { ExampleModule } from './example/example.module';

@Module({

imports: [ExampleModule],

})

export class AppModule {}

Now, based on the protobuf schema, we will write a basic controller that implements it. The NestJS microservices package abstracts the gRPC protocol almost perfectly.

All we need to do is follow the naming conventions we used in the protobufs (or pass explicit string arguments; check this out in the docs).

// src/example/example.controller.ts

import { Controller } from '@nestjs/common';

import { GrpcMethod } from '@nestjs/microservices';

import { GetExampleRequest, GetExampleResponse } from './example.proto';

import { ExampleProvider } from './example.provider';

@Controller()

export class ExampleService {

constructor(private exampleProvider: ExampleProvider) {}

@GrpcMethod() // grpc: ExampleService/GetExample

getExample(req: GetExampleRequest): GetExampleResponse {

const resp: GetExampleResponse = {

boolData: this.exampleProvider.getExampleBool(),

stringData: [this.exampleProvider.getExampleString()],

};

return resp;

}

}
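The naming convention used by the bare `@GrpcMethod()` decorator above can be summed up in a few lines: the class name is taken as the service name, and the handler name is matched against the rpc name with its first letter capitalized. The helper below, `grpcRouteFor`, is a hypothetical illustration of that rule in plain TypeScript, not a NestJS API.

```typescript
// Hypothetical helper mirroring the convention: the class name is used as the
// service name and the handler name is capitalized to get the rpc name.
function grpcRouteFor(className: string, handlerName: string): string {
  const rpcName = handlerName.charAt(0).toUpperCase() + handlerName.slice(1);
  return `${className}/${rpcName}`;
}

const route = grpcRouteFor('ExampleService', 'getExample');
console.log(route); // prints "ExampleService/GetExample"
```

If the class or method names do not line up with the schema this way, the explicit string arguments mentioned earlier are the safer choice.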

This basically concludes the server setup.

We will test it by starting the server with npm start and running a JavaScript gRPC client that logs the server responses.

Here is a test client for our server:

// test/example.client.js

const grpc = require('@grpc/grpc-js');

const protoLoader = require('@grpc/proto-loader');

const path = require('path');

const protoPath = path.join(

__dirname,

'..',

'proto',

'example',

'v1',

'example.proto',

);

const packageDefinition = protoLoader.loadSync(protoPath);

const protoDescriptor = grpc.loadPackageDefinition(packageDefinition);

const examplePackage = protoDescriptor['example']['v1'];

const exampleServiceClient = new examplePackage.ExampleService(

'localhost:3000',

grpc.credentials.createInsecure(),

);

exampleServiceClient.getExample({ integerData: 123 }, (err, resp) => {

if (err) {

throw err; // err is already an Error object, so rethrow it as-is

} else {

console.log(JSON.stringify(resp));

}

});

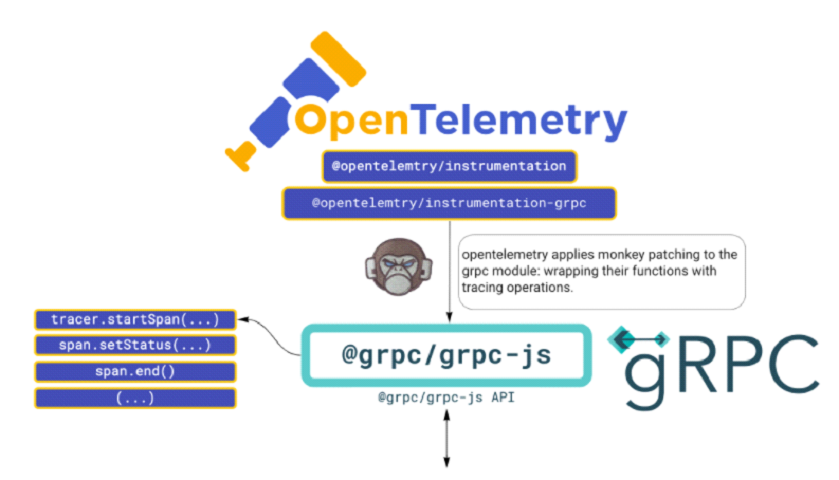

OpenTelemetry JS automatically instruments our gRPC server with spans and traces by wrapping the gRPC modules with tracing operations. So instead of calling the regular gRPC functions directly, we first call into OpenTelemetry, which starts tracing the call and then invokes the actual function.
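To make the wrapping idea concrete, here is a minimal sketch in plain TypeScript. It is not how the OpenTelemetry instrumentation is actually written; `traced`, `TimedCall`, `finishedCalls` and `fakeGrpcServer` are hypothetical names, and the sketch only covers synchronous functions.

```typescript
// A toy record of a traced call; real spans carry far more information.
interface TimedCall {
  name: string;
  durationMs: number;
}

const finishedCalls: TimedCall[] = [];

// Hypothetical helper: returns a wrapper that records timing information
// and then delegates to the original function with the same `this` and arguments.
function traced<A extends unknown[], R>(name: string, fn: (...args: A) => R): (...args: A) => R {
  return function (this: unknown, ...args: A): R {
    const startedAt = Date.now();
    try {
      return fn.apply(this, args);
    } finally {
      finishedCalls.push({ name, durationMs: Date.now() - startedAt });
    }
  };
}

// Stand-in for a module that an instrumentation library would patch.
const fakeGrpcServer = {
  handleCall(method: string): string {
    return `handled ${method}`;
  },
};

// Monkey patching: swap the method for its traced wrapper.
fakeGrpcServer.handleCall = traced('handleCall', fakeGrpcServer.handleCall);

const reply = fakeGrpcServer.handleCall('GetExample');
console.log(reply, finishedCalls.length);
```

Callers keep using `fakeGrpcServer.handleCall` as before, which is what makes this kind of instrumentation automatic. It is also why a wrapper that does not faithfully pass through everything the original returns can break the patched library.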

With OpenTelemetry 0.26.x (and, I think, earlier versions), my integration failed because of a bug in the instrumentation library. The monkey patching overrode some fields of the object returned by the gRPC API, which broke the integration (streaming servers could not be created). Check out the issue: Here.

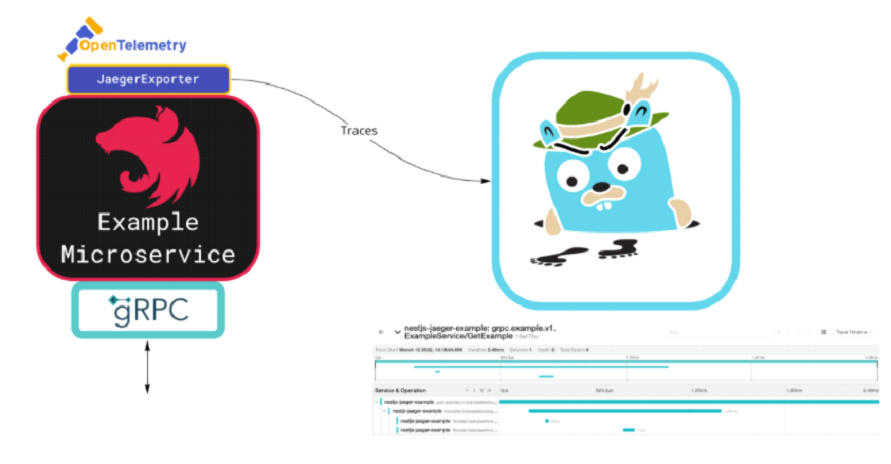

We will use OpenTelemetry’s Jaeger exporter to export the OTEL traces to a Jaeger backend service. Jaeger is a backend application for tracing (other examples are Zipkin, Lightstep, etc.) that lets us view, search, and analyze traces.

For more information, check out the Jaeger docs: Here

First we need to install OpenTelemetry packages:

npm i --save @opentelemetry/{api,sdk-node,auto-instrumentations-node}

And the Jaeger exporter package:

npm i --save @opentelemetry/exporter-jaeger

Now we will initialize the tracing as described in their repo.

// src/utils/tracing.init.ts

import * as process from 'process';

import * as opentelemetry from '@opentelemetry/sdk-node';

import { getNodeAutoInstrumentations } from '@opentelemetry/auto-instrumentations-node';

import { Resource } from '@opentelemetry/resources';

import { SemanticResourceAttributes } from '@opentelemetry/semantic-conventions';

import { SimpleSpanProcessor } from '@opentelemetry/sdk-trace-base';

import { JaegerExporter } from '@opentelemetry/exporter-jaeger';

export const initTracing = async (): Promise<void> => {

const traceExporter = new JaegerExporter();

const sdk = new opentelemetry.NodeSDK({

resource: new Resource({

[SemanticResourceAttributes.SERVICE_NAME]: 'nestjs-jaeger-example',

}),

instrumentations: [getNodeAutoInstrumentations()],

// Using a simple span processor for faster response.

// You can also use the batch processor instead.

spanProcessor: new SimpleSpanProcessor(traceExporter),

});

try {

await sdk.start();

console.log('Tracing initialized');

} catch (error) {

console.log('Error initializing tracing', error);

}

process.on('SIGTERM', () => {

sdk

.shutdown()

.then(() => console.log('Tracing terminated'))

.catch((error) => console.log('Error terminating tracing', error))

.finally(() => process.exit(0));

});

};

The SDK basically initializes everything for you. You only need to call initTracing at the start of the bootstrap function, and we’re good to go.

// src/main.ts

import { NestFactory } from '@nestjs/core';

import { MicroserviceOptions } from '@nestjs/microservices';

import { AppModule } from './app.module';

import { grpcOptions } from './utils/grpc.options';

import { initTracing } from './utils/tracing.init';

async function bootstrap() {

await initTracing(); // Added tracing here

const app = await NestFactory.createMicroservice<MicroserviceOptions>(

AppModule,

grpcOptions,

);

await app.listen();

}

bootstrap();
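The tracing setup above picks a simple span processor and mentions the batch processor as an alternative. The difference is roughly that a simple processor hands every finished span to the exporter right away, while a batch processor buffers spans and exports them in groups. The toy classes below are a sketch of that trade-off only; `ToySimpleProcessor` and `ToyBatchProcessor` are hypothetical and far simpler than the real OpenTelemetry processors, which, for example, also flush on a timer.

```typescript
type ExportFn = (batch: string[]) => void;

// Exports each span on its own as soon as it ends.
class ToySimpleProcessor {
  private readonly exportSpans: ExportFn;

  constructor(exportSpans: ExportFn) {
    this.exportSpans = exportSpans;
  }

  onEnd(span: string): void {
    this.exportSpans([span]);
  }
}

// Buffers ended spans and exports them together once the buffer is full.
class ToyBatchProcessor {
  private readonly exportSpans: ExportFn;
  private readonly maxBatchSize: number;
  private buffer: string[] = [];

  constructor(exportSpans: ExportFn, maxBatchSize: number) {
    this.exportSpans = exportSpans;
    this.maxBatchSize = maxBatchSize;
  }

  onEnd(span: string): void {
    this.buffer.push(span);
    if (this.buffer.length >= this.maxBatchSize) this.flush();
  }

  // Exports whatever is buffered, for example on shutdown.
  flush(): void {
    if (this.buffer.length === 0) return;
    this.exportSpans(this.buffer);
    this.buffer = [];
  }
}

let simpleExports = 0;
let batchExports = 0;
const simple = new ToySimpleProcessor(() => { simpleExports += 1; });
const batch = new ToyBatchProcessor(() => { batchExports += 1; }, 3);

for (const span of ['a', 'b', 'c', 'd']) {
  simple.onEnd(span);
  batch.onEnd(span);
}
batch.flush();

console.log(simpleExports, batchExports); // prints: 4 2
```

For a local demo, seeing every span immediately is convenient; under real load, fewer and larger exports are usually the better fit.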

Next, let’s set up the Jaeger deployment as scripts in our package.json, using Docker. By default, Jaeger uses port 16686/tcp for the UI and 6832/udp for collecting traces.

{

"scripts": {

"jaeger:start": "docker run --rm -d --name jaeger -p 16686:16686 -p 6832:6832/udp -e JAEGER_DISABLED=true jaegertracing/all-in-one:1.32",

"jaeger:stop": "docker stop jaeger"

}

}

That’s it.

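Now, if you start Jaeger and the server and then run the test client, you will see traces in the Jaeger UI at http://localhost:16686.

On top of the automatic instrumentation, you can open spans manually inside a handler, as this version of the controller does:

// src/example/example.controller.ts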

import { Controller } from '@nestjs/common';

import { GrpcMethod } from '@nestjs/microservices';

import { Span, SpanStatusCode } from '@opentelemetry/api';

import { getTracer } from '../utils/tracing.tracer';

import { GetExampleRequest, GetExampleResponse } from './example.proto';

import { ExampleProvider } from './example.provider';

@Controller()

export class ExampleService {

constructor(private exampleProvider: ExampleProvider) {}

@GrpcMethod()

getExample(req: GetExampleRequest): GetExampleResponse {

return (

getTracer()

// startActiveSpan not only starts a span, but also sets the span context to be the newly opened span

.startActiveSpan(

'Controller ExampleService/getExample',

(span: Span): GetExampleResponse => {

// attributes are key value pairs that can be set on a span

span.setAttribute('grpc.request', JSON.stringify(req));

// the status marks whether the traced operation succeeded or failed

span.setStatus({ code: SpanStatusCode.OK, message: 'all good' });

const resp: GetExampleResponse = {

boolData: this.exampleProvider.getExampleBool(),

stringData: [this.exampleProvider.getExampleString()],

};

// every span that was started must be ended manually

span.end();

return resp;

},

)

);

}

}
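One thing to watch with manual spans like the one above: if the handler throws before `span.end()` is reached, the span is never ended. A common way around this is to end the span in a `finally` block and to set an error status when something goes wrong. The sketch below shows the pattern with a stand-in span type so that it runs on its own; `SketchSpan`, `RecordingSpan` and `runInSpan` are hypothetical names, and with the real API you would apply the same structure inside the `startActiveSpan` callback.

```typescript
// Minimal stand-in for a span; only the calls used in this sketch are modeled.
interface SketchSpan {
  setStatus(status: { code: 'OK' | 'ERROR'; message?: string }): void;
  end(): void;
}

// Records what happened to it so the outcome can be inspected.
class RecordingSpan implements SketchSpan {
  status = 'UNSET';
  ended = false;

  setStatus(status: { code: 'OK' | 'ERROR'; message?: string }): void {
    this.status = status.code;
  }

  end(): void {
    this.ended = true;
  }
}

// Hypothetical helper: runs `work` inside a span, marks failures as ERROR,
// and always ends the span, even when `work` throws.
function runInSpan<T>(span: SketchSpan, work: () => T): T {
  try {
    const result = work();
    span.setStatus({ code: 'OK' });
    return result;
  } catch (error) {
    span.setStatus({ code: 'ERROR', message: String(error) });
    throw error;
  } finally {
    span.end();
  }
}

const okSpan = new RecordingSpan();
const value = runInSpan(okSpan, () => 42);

const failedSpan = new RecordingSpan();
try {
  runInSpan(failedSpan, () => {
    throw new Error('boom');
  });
} catch {
  // The error still reaches the caller; the span has been ended regardless.
}

console.log(value, okSpan.status, failedSpan.status, failedSpan.ended);
```

Setting the status after the work has finished, rather than before it, also keeps the span from reporting OK for a call that later fails.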

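For debugging, you can swap the Jaeger exporter for the console exporter, which prints every span to stdout:

// src/utils/tracing.init.ts

export const initTracing = async (): Promise<void> => {

// just switch the trace exporter; ConsoleSpanExporter is imported from '@opentelemetry/sdk-trace-base'

const traceExporter = new ConsoleSpanExporter();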

const sdk = new opentelemetry.NodeSDK({

resource: new Resource({

[SemanticResourceAttributes.SERVICE_NAME]: 'nestjs-jaeger-example',

}),

instrumentations: [getNodeAutoInstrumentations()],

spanProcessor: new SimpleSpanProcessor(traceExporter),

});

// ... the rest of initTracing stays the same as in the Jaeger version

};

If the console exporter works for you but the Jaeger exporter does not, check whether there is a problem with the Jaeger deployment, or configure the Jaeger exporter manually as described here.
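When configuring the exporter manually, the part that most often needs changing is the agent address, for example when Jaeger runs in another container instead of on localhost. A minimal sketch of resolving that address with sensible defaults is shown below. `resolveJaegerAgent` and the `EXAMPLE_JAEGER_HOST` / `EXAMPLE_JAEGER_PORT` variable names are hypothetical and exist only for this example. The assumption is that the result is passed to the exporter as `host` and `port` options, so check the exporter documentation for the exact option names.

```typescript
interface JaegerAgentAddress {
  host: string;
  port: number;
}

// Hypothetical helper: picks the agent address from an env-like object,
// falling back to the defaults used in this article (localhost, 6832/udp).
function resolveJaegerAgent(env: Record<string, string | undefined>): JaegerAgentAddress {
  const host = env['EXAMPLE_JAEGER_HOST'] || 'localhost';
  const parsedPort = Number(env['EXAMPLE_JAEGER_PORT']);
  // Anything that is not a positive integer falls back to the default port.
  const port = parsedPort > 0 && parsedPort % 1 === 0 ? parsedPort : 6832;
  return { host, port };
}

const defaults = resolveJaegerAgent({});
const custom = resolveJaegerAgent({
  EXAMPLE_JAEGER_HOST: 'jaeger.internal',
  EXAMPLE_JAEGER_PORT: '7000',
});

console.log(defaults, custom);
```

In a real service, you would pass `process.env` to the helper and log the resolved address at startup, which makes a mismatch between the exporter and the Jaeger deployment easier to spot.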

I hope some of you found this project insightful :)

Any thoughts? Connect with me on GitHub
